In part one of this series, I gave a general introduction to Text-to-Image models in Machine Learning and implemented a demo of Stable Diffusion in Google Colab. In this second part, I will talk about two other popular and powerful platforms in the Text-to-Image world: Midjourney and DALL-E 2. Finally, I will compare the images generated by these two platforms with the Stable Diffusion output from part one and wrap up by sharing some of my thoughts.

Midjourney

About Midjourney

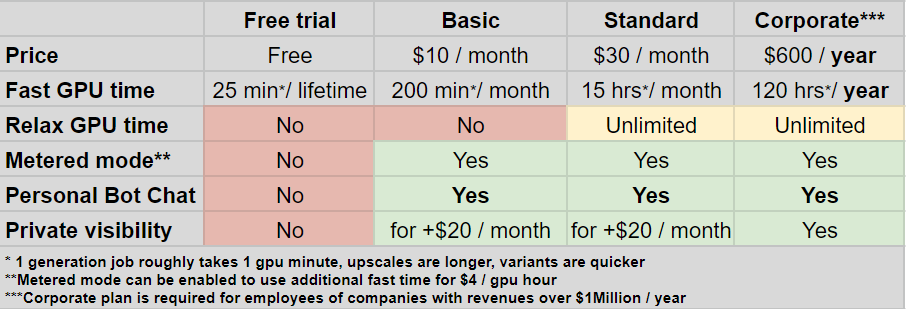

Midjourney is an independent research lab led by David Holz, a talented researcher in Computer Vision, Applied Math, and Physics. His Google Scholar profile shows hundreds of publications and thousands of citations in those fields. Currently, Midjourney lets users start with a free tier, then offers paid tiers with more capacity, faster generation, and additional features. We will learn how to use the Midjourney Discord bot in the next section.

Generate images in Midjourney Discord channel

You can try the Midjourney Discord bot at this link. Alternatively, Midjourney can also be added to your own Discord server; see this link. In this demo, I use the Midjourney Discord bot.

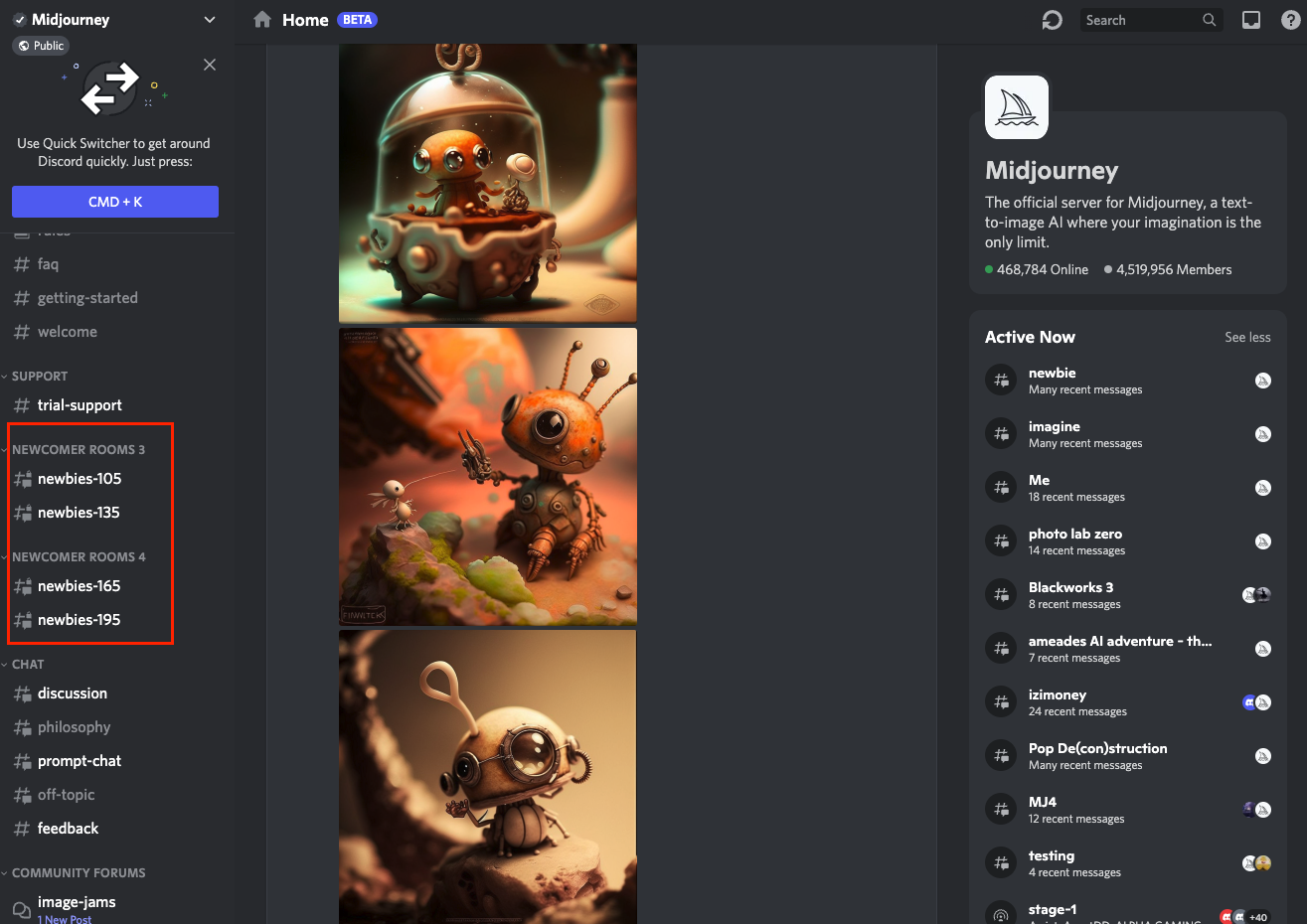

After logging in through the link above, you will see channels like these.

Then choose a channel with the prefix newbies-. The channel numbers may be different by the time you read this blog. In this case, I chose newbies-165.

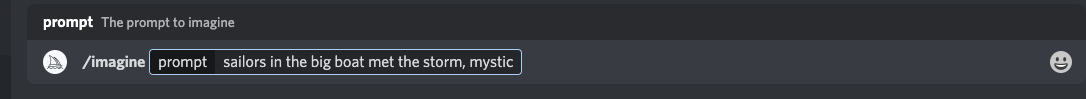

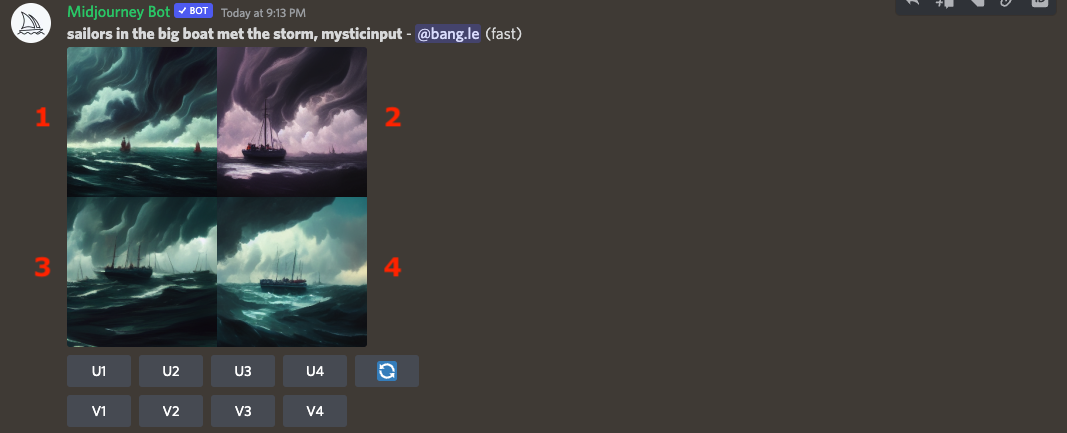

Then, in the message box, start generating images with the /imagine command. I use the same input as in part one: “sailors in the big boat met the storm, mystic”.

Then hit Enter, and you will see the bot generating the images.

The complete output is something like this.

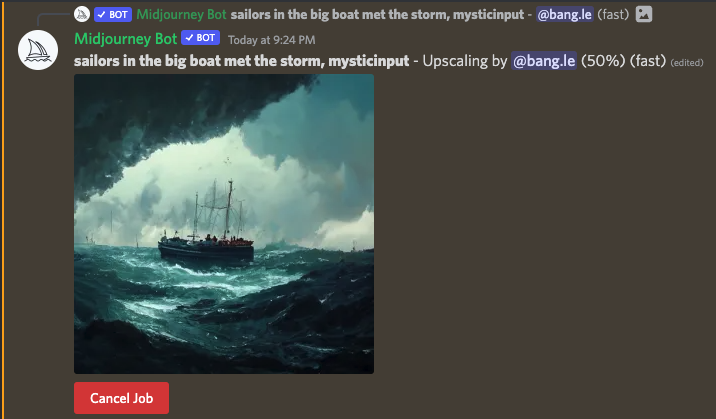

From here, you have several options. Four images are generated and numbered as shown above: 1 and 2 on the top row, 3 and 4 on the bottom row. If you like one of them, choose its U button, which stands for upscale: it increases the resolution so that the image has better quality. I will try upscaling the 4th image by clicking U4.

The upscaled image has better quality and higher resolution.

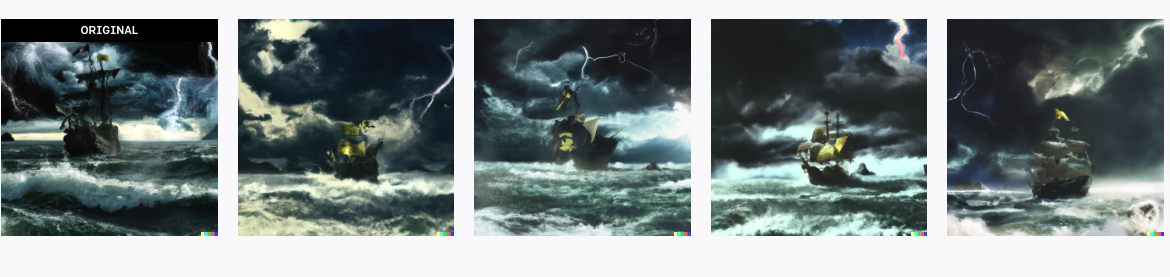

If you do not like the generated images, you can choose V to make variations of one of them. Let’s try V1.
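The U and V buttons use the same grid numbering. As a small illustration, the hypothetical helpers below decode a button label such as U4 into its action and grid position. They also compute that tile's pixel box in the grid image, assuming the four tiles split the image evenly. The 1024x1024 size in the example is arbitrary. None of this is a Midjourney API; it only makes the numbering explicit.

```python
# Hypothetical helpers that only make the Midjourney grid numbering explicit.
# Assumption: a 2x2 grid numbered left to right, top to bottom (1 2 / 3 4).

ACTIONS = {"U": "upscale", "V": "variation"}


def parse_button(label: str) -> tuple:
    """Turn a button label such as "U4" into (action, row, col), with 0-based row/col."""
    if len(label) != 2 or label[0] not in ACTIONS or label[1] not in "1234":
        raise ValueError(f"unexpected button label: {label!r}")
    row, col = divmod(int(label[1]) - 1, 2)  # two tiles per row
    return ACTIONS[label[0]], row, col


def tile_box(row: int, col: int, width: int, height: int) -> tuple:
    """Pixel box (left, upper, right, lower) of one tile in a width x height grid image."""
    tile_w, tile_h = width // 2, height // 2
    return (col * tile_w, row * tile_h, (col + 1) * tile_w, (row + 1) * tile_h)


if __name__ == "__main__":
    action, row, col = parse_button("U4")
    print(action, row, col)                # upscale 1 1
    print(tile_box(row, col, 1024, 1024))  # (512, 512, 1024, 1024)
```

The box follows the (left, upper, right, lower) convention used by Pillow's `Image.crop`, so it could be used to cut a single tile out of a downloaded grid.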

Back to the input prompt: Midjourney supports a number of parameters for different scenarios, and the full list can be found here. The parameters I usually use are:

- --seed: Sets the seed, which can sometimes help keep results more steady and reproducible when generating a similar prompt again.
- --video: Saves a progress video of the generation. To get the video link, react to the output with the ✉️ envelope emoji, and the bot sends the link to you in a DM.
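Parameters go at the end of the prompt, after the text description. The sketch below assembles the text you would type after /imagine. `build_imagine_prompt` is a hypothetical helper, and the seed value 1234 is an arbitrary example.

```python
from typing import Optional

# Hypothetical helper: Midjourney parameters are appended after the text description.


def build_imagine_prompt(description: str, seed: Optional[int] = None,
                         video: bool = False) -> str:
    """Assemble the text to type after /imagine."""
    parts = [description.strip()]
    if seed is not None:
        if seed < 0:
            raise ValueError("seed must be a non-negative integer")
        parts.append(f"--seed {seed}")
    if video:
        parts.append("--video")  # a flag, takes no value
    return " ".join(parts)


if __name__ == "__main__":
    print(build_imagine_prompt("sailors in the big boat met the storm, mystic", seed=1234, video=True))
    # sailors in the big boat met the storm, mystic --seed 1234 --video
```

Reusing the same seed with the same prompt is what makes a result easier to reproduce later.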

DALL-E 2

About DALL-E 2

DALL-E and DALL-E 2 are two deep learning models developed by OpenAI, an AI research lab founded in San Francisco in late 2015 by Elon Musk and others (Musk resigned from the board in February 2018). DALL-E first appeared in January 2021. The following year, in April 2022, OpenAI officially announced DALL-E 2, which was said to generate higher-quality images and “can combine concepts, attributes, and styles”. Like Midjourney, DALL-E 2 follows a freemium business model. The free tier lets you try most of its wonderful features, but the paid tiers of course offer much more. You can check the pricing here.

Currently, there are two ways to try DALL-E 2: calling the API (documentation here) or generating images on the OpenAI website.
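For the API route, here is a minimal sketch that uses only the Python standard library. It makes three assumptions: the image generation endpoint is `https://api.openai.com/v1/images/generations`, your API key is stored in the `OPENAI_API_KEY` environment variable, and the helper names `build_generation_request` and `image_urls` are hypothetical. OpenAI's official `openai` Python package wraps the same call. Treat the body fields (`model`, `prompt`, `n`, `size`) as assumptions to check against the current API documentation.

```python
import json
import os
import urllib.request

GENERATIONS_URL = "https://api.openai.com/v1/images/generations"
DALLE2_SIZES = {"256x256", "512x512", "1024x1024"}


def build_generation_request(prompt: str, api_key: str, n: int = 4,
                             size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) a POST request asking DALL-E 2 for n images."""
    if size not in DALLE2_SIZES:
        raise ValueError(f"unsupported size for DALL-E 2: {size}")
    body = {"model": "dall-e-2", "prompt": prompt, "n": n, "size": size}
    return urllib.request.Request(
        GENERATIONS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def image_urls(response_json: dict) -> list:
    """Collect the image URLs from the JSON response (one entry per image under "data")."""
    return [item["url"] for item in response_json["data"]]


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if key is None:
        print("Set OPENAI_API_KEY to send the request.")
    else:
        req = build_generation_request("sailors in the big boat met the storm, mystic", key)
        with urllib.request.urlopen(req) as resp:
            for url in image_urls(json.load(resp)):
                print(url)
```

The request is only sent when the environment variable is set. With the default response format, each entry under `data` holds a URL to one generated image.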

Generate images on the OpenAI website

The first thing you need to do is register an account. Unfortunately, OpenAI supports only a limited set of countries; you can check the list here. A quick tip if your country is not on the list is to use a VPN and a temporary phone number to receive the OTP (but I do not recommend this).

After logging in, you will see a page like this.

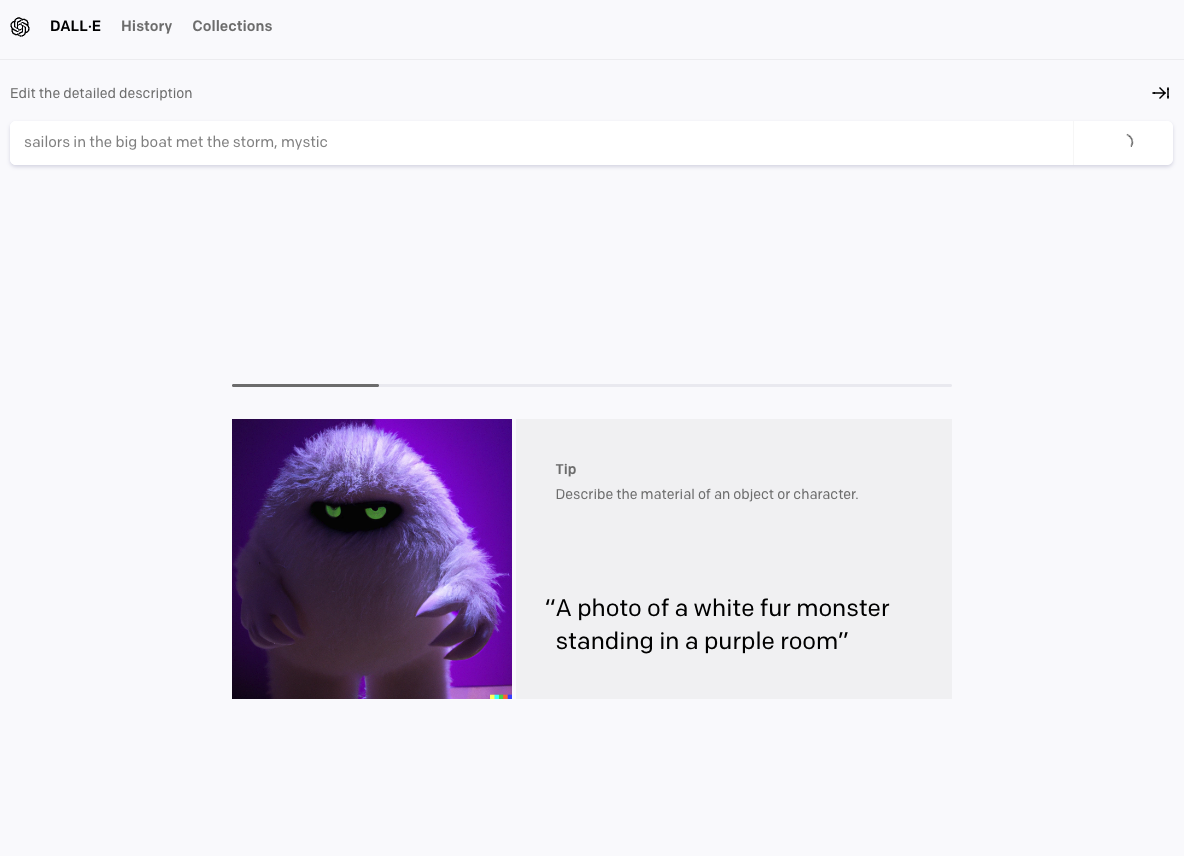

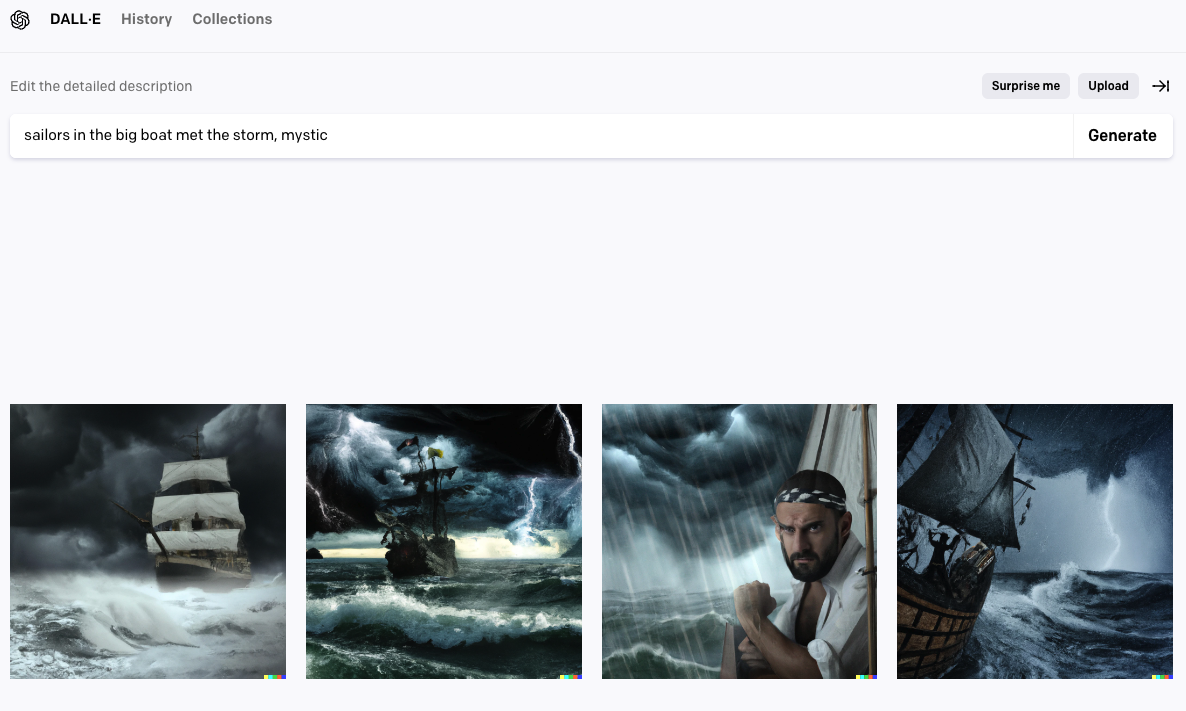

Then, enter the prompt you want. In this case, I will use the same input as for Stable Diffusion and Midjourney: “sailors in the big boat met the storm, mystic”.

And these are the generated images.

This is one of the images at full size.

You can also make variations of one of the images from the first generation. The result looks something like this.
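The same variations feature is also exposed through the API. The sketch below uses the standard library to build a multipart/form-data request for the `https://api.openai.com/v1/images/variations` endpoint. The helper name `build_variation_request` and the uploaded filename `image.png` are hypothetical. The sketch assumes the input is a square PNG under 4 MB, which is what the API documentation asks for with DALL-E 2 variations.

```python
import urllib.request
import uuid

VARIATIONS_URL = "https://api.openai.com/v1/images/variations"


def build_variation_request(png_bytes: bytes, api_key: str, n: int = 4,
                            size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) a multipart/form-data request for image variations."""
    boundary = uuid.uuid4().hex  # random separator between form parts
    parts = []
    # Plain text form fields
    for name, value in {"model": "dall-e-2", "n": str(n), "size": size}.items():
        parts.append((
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f"{value}\r\n"
        ).encode("utf-8"))
    # The source image as a file field
    parts.append((
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="image"; filename="image.png"\r\n'
        "Content-Type: image/png\r\n\r\n"
    ).encode("utf-8") + png_bytes + b"\r\n")
    # Closing boundary ends the form
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))
    return urllib.request.Request(
        VARIATIONS_URL,
        data=b"".join(parts),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` returns JSON, and its `data` list holds one entry per variation.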

Comparison and thoughts

Comparing images from Stable Diffusion, Midjourney, and DALL-E 2

With the same input “sailors in the big boat met the storm, mystic”, I used Stable Diffusion, Midjourney, and DALL-E 2 to generate the images. Let’s check the outputs again.

Below is the output of Stable Diffusion:

This is the output of Midjourney:

And this is the output of DALL-E 2:

In my opinion, all three tools are great at generating images. They also have other awesome features that I have not had the chance to try yet. They are pioneers in the Text-to-Image world and could be game changers for Machine Learning/AI applications.

Each of them has something special. Stable Diffusion is open-source, which I appreciate: it helps practitioners like me understand in depth how these ML algorithms and models work. On the other hand, Midjourney and DALL-E 2 have powerful models that make their images artistic. Midjourney runs as a Discord bot, which makes it convenient for anybody to come and try. And DALL-E 2 comes with an API from OpenAI, which you can easily integrate into your applications.

My thoughts about Text-to-Image

While working on this series, I also thought about the other side: the concerns around Text-to-Image and AI in general. Although Text-to-Image and implementations like Stable Diffusion, Midjourney, or DALL-E 2 are great for anyone who wants to create unique images, there are concerns such as:

- Will they replace artists in the future? Generating these unique images is very cheap (e.g. $10 for 100 images with Midjourney), especially compared with hundreds of dollars for a painting.

- NSFW content.

I think this is the same problem as with any new technology. The technology itself is not bad; it is the result of thousands of hours of hard work by experts trying to make human life better. But there is still a chance that bad actors will take advantage of it, and we need to think about a system to control it, or at least to notice when that happens.

Conclusion

In this blog, I introduced two pioneers in the Text-to-Image world, Midjourney and DALL-E 2. I also generated images with them, compared those images with the Stable Diffusion output from part one, and shared my thoughts about the concerns around Text-to-Image.

Many thanks for taking the time to read this. If you have any questions or suggestions about my blog, please reach out to me via LinkedIn or the email on my homepage.